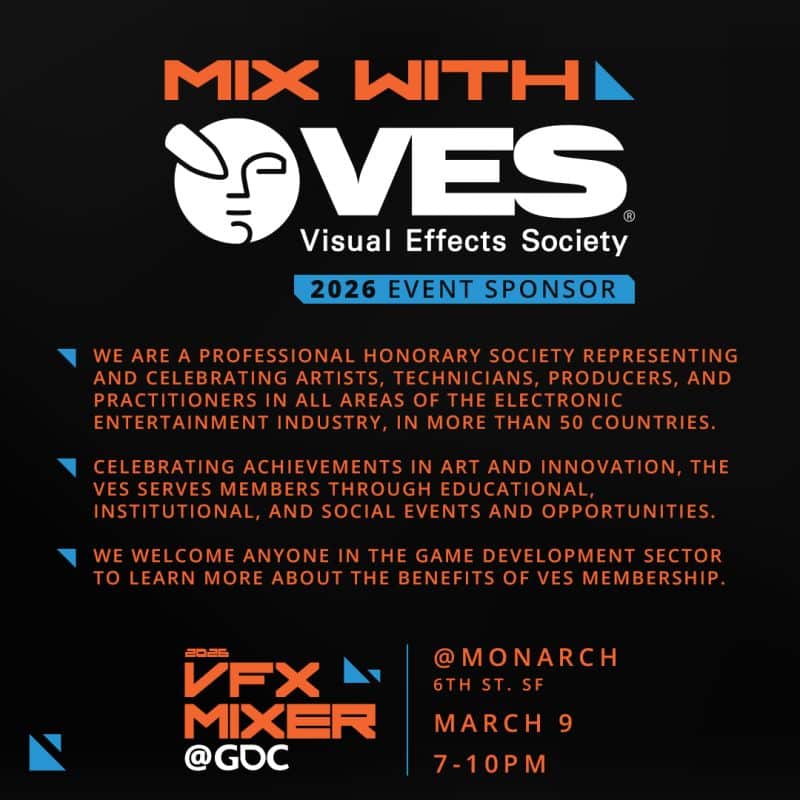

Upcoming Events

Quick Links

Sponsorship

Explore opportunities to connect our members with your brand’s story.

Virtual Production Resources

Stay ahead of the curve with our knowledge hub showcasing cutting-edge innovations, industry-leading practices, and captivating real-world stories.

Starting a Career in VFX?

Plan for a thriving VFX career with the support of our mentorship network, resources, and educational presentations.

Archive Highlights

Discover how far VFX has come with our exclusive members-only library of expert interviews and special collections.

Advance Your VFX Career

Dive into our job board and learn strategies to help you succeed in your job search.