By TREVOR HOGG

Images courtesy of Netflix.

When a young bride becomes an unexpected offering to a dragon, both the creature and the royal family that betrayed her get more than what they bargained for as she has no intention of carrying on the sacrificial tradition. This is the premise for the Netflix production of Damsel directed by Juan Carlos Fresnadillo (Intruders) and starring Millie Bobby Brown, Ray Winstone, Nick Robinson, Shohreh Aghdashloo, Angela Bassett and Robin Wright. Dividing the visual effects work for the dark fantasy feature were supervisors Nigel Denton-Howes and Nicholas Brooks. “I came in at the beginning of post-production to help bring the dragon along because my background is doing creature stuff,” Denton-Howes states. “The original supervisor was more of an environments person.”

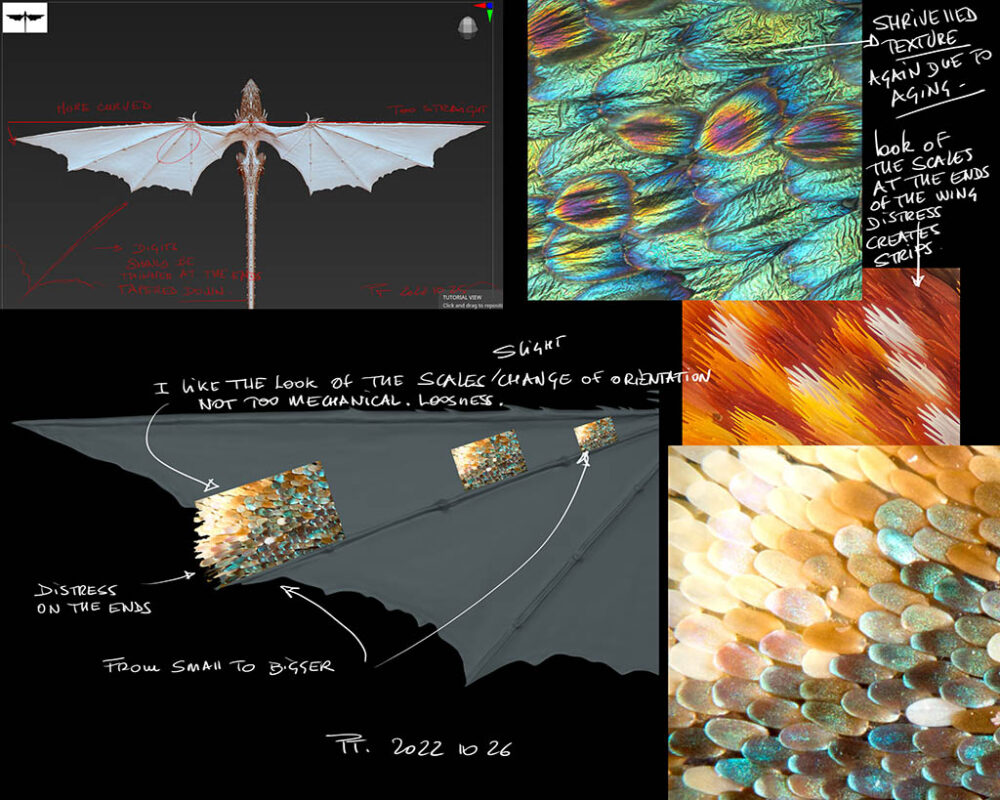

The notes of Production Designer Patrick Tatopoulos regarding the wings of the dragon.

Responsible for the production design was Patrick Tatopoulos, who has made a name for himself as a creature designer. “Patrick was brought back as well in post, which is unusual,” Denton-Howes notes. “I managed to get him to work with the artists at One of Us, and I finished off the look development and all of the details that are needed to make [the dragon] look real when you get into the shots.” The desire was not to go for a lizard-inspired dragon like Game of Thrones. “Our dragon is much closer to a panther, which is why when we brought her into the environments and caves, she is just as comfortable running around the caves as she is flying around them. Whereas your stereotypical dragon is lumbering on the ground and graceful in the air,” Denton-Howes explains. Tatopoulos’ designs for the dragon were refined with the original version having a strong graphical orange line going down the flanks and back of the neck. “We followed the line to the spine and tail. That allows her to stand out in the caves. But the whole textural approach is that she is part of this environment and is supposed to blend in,” Denton-Howes says.

Great attention was paid by Production Designer Patrick Tatopoulos to getting the dragon anatomically correct, such as the hip bones.

“Our dragon is much closer to a panther, which is why when we brought her into the environments and caves, she is just as comfortable running around the caves as she is flying around them. Whereas your stereotypical dragon is lumbering on the ground and graceful in the air.”

—Nigel Denton-Howes, Visual Effects Supervisor

Getting the fire simulations correct was a major responsibility for One of Us, which handled the dragon and was aided by on-set lighting.

Shohreh Aghdashloo provides the voice of the dragon. “There are certain sounds that are awkward for a mouth that big and a muzzle that long to make,” Denton-Howes remarks. “There is some lip sync, and we’re using the jaw a lot, but a lot of the motion and mechanics were actually done with the neck. When she inhales, the neck plates open, and it compresses like bellows when she exhales. We added a shiver to the neck plates to correspond to her emotional state. When she is confident, there is very little flutter in them and when she gets angry, they vibrate like crazy. That informed the sound design.” Something unusual for Denton-Howes was getting an opportunity to work directly with the sound design team. “We did a bunch of loops back and forth of animation and sound tests until we got a final dialogue sound that was going to work.”

The neck plates were utilized to help make it believable that the dragon could speak as well as convey the emotional state.

The dragon was modeled on a panther, meaning that it was equally comfortable moving on the ground and flying.

Environments were enhanced to get a proper interaction with the dragon. “A lot of the environments are CG, but on the sets that were built we added all of the rocks and debris on top of them because they were actually bare,” Denton-Howes says. “When the dragon is walking, she can kick stones, and everything extended in the background is CG. When she is interacting with characters, like when one of the guards gets grabbed, it’s a takeover into an all-CG character. For some of them, the whole shot is CG. When we’re interacting with Millie, like when the dragon’s hand is on her neck, on set there were interactive elements such as claws that could press down to allow her to feel some of it. Then we also bent her skin in 2D to add indentations, and, in the dragon, there was some modeling to push in the pads of the thumbs and fingers to make them squishy so you can feel that the two are really touching each other.” Each cave was distinct. “One had stalactites and stalagmites. The main action area has giant columns and looks like a cathedral. Then there is the crystal cave that Millie climbs up. It’s done so you don’t feel as if you’re in the same place all of the time.”

Each cave was treated as a different environment.

“A lot of the motion and mechanics were actually done with the neck. When she inhales, the neck plates open, and it compresses like bellows when she exhales. We added a shiver to the neck plates to correspond to her emotional state. When she is confident, there is very little flutter in them, and when she gets angry, they vibrate like crazy. That informed the sound design. We did a bunch of loops back and forth of animation and sound tests until we got a final dialogue sound that was going to work.”

—Nigel Denton-Howes, Visual Effects Supervisor

The task for visual effects was to refine the details for the dragon.

Lighting continuity was the biggest issue when Elodie (Millie Bobby Brown) is tossed into a crevasse that leads to the caves inhabited by the dragon. “You were starting at one place and knew what was going to be at the bottom,” Denton-Howes describes. “They were totally different stages and sets, and you’re telling a story of moving through space with bespoke shots where no two shots are the same, so you’re not reusing anything other than the digital double.” Assisting the cave lighting were glow worms. “Glow worms don’t have magical healing properties, but they actually exist. The bluish white light was part of the production design, and Larry Fong (Kong: Skull Island), our DP, ran with that. Throughout the whole thing we were trying to be photographic. When Millie falls down and has the pomander, it goes to black and slowly comes back into lighting. The idea is that your eyes are adjusting to the dark. We were trying to find photographic reasons for there to be light, and glow worms were one of them. Even in the main caves, it was a tricky lighting scenario on the set because Larry didn’t have a lot of choice of how he lit because the stages were small.”

Modern-day elements like cruise ships had to be painted out.

“Castles are like digital people where everybody knows what they look like, so you know when it’s not quite right. We did a lot of work on that, balancing fantasy with realistic. Initially, [director] Juan Carlos [Fresnadillo] wanted the castle to be clean and beautiful, but when you do that it doesn’t look real. You need to grunge the castle up and allow it to have a couple of centuries of weathering, but it’s still beautiful and magnificent.”

—Nigel Denton-Howes, Visual Effects Supervisor

Because of an actual drought, the colors in the plate photography had to be enhanced by Rodeo FX to make Aurea appear lush.

Primary vendors for the 1,202 visual effects shots were One of Us, which was responsible for the dragon; Rodeo FX, which did a lot of environments and glow worms; Pixomondo, which handled the dragon, dragon lair, and the opening and end sequences; and Important Looking Pirates, which created the harbor environment and Elodie’s homeland. Other contributions came from The Yard VFX, Rising Sun Pictures, Rebel Unit, Atomic Arts, Primary VFX, NetFX and TPO VFX. “Later in reshoots, we added the opening scenes of Elodie’s homeland as visual contrast, as well as for storytelling reasons,” Denton-Howes states. “When you get into Aurea, it needs to look realistic but really lush and beautiful. In the grade, [director] Juan Carlos Fresnadillo pushed it into gold and warmed things up even further, which is a subtle change.”

Looming over the castle is the Stone Mountain, which was inspired by the tooth of a cat.

“When we’re interacting with Millie [Bobby Brown], like when the dragon’s hand is on her neck, on set there were interactive elements such as claws that could press down to allow her to feel some of it. Then we also bent her skin in 2D to add indentations, and, in the dragon, there was some modeling to push in the pads of the thumbs and fingers to make them squishy so you can feel that the two are really touching each other.”

—Nigel Denton-Howes, Visual Effects Supervisor

Weathering had to be added to the castle to make it appear more believable.

Atmospherics were pivotal in obscuring the Dragon Gate to the point that the viewer would not be sure if a real dragon was staring right at them.

One lighting source in the caves is the glow worms, which have been given healing properties.

Rodeo FX had to replicate a partial set for the crystal cave so it would appear to be a mountainous climb for Elodie.

As for the Stone Mountains that loom over the castle, the feline anatomy was an inspiration, thereby tying the ominous natural landmark with the design of the dragon. “If you were to zoom out, the main mountain is analogous to a tooth of a cat, and for the lower mountains, you could put a jaw of a cat there,” Denton-Howes reveals. “The base of the castle is real in close-up shots extended up, and for most shots it’s entirely CG. That was time-consuming to do. Castles are like digital people where everybody knows what they look like, so you know when it’s not quite right. We did a lot of work on that, balancing fantasy with realistic. Initially, Juan Carlos wanted the castle to be clean and beautiful, but when you do that it doesn’t look real. You need to grunge the castle up and allow it to have a couple of centuries of weathering, but it’s still beautiful and magnificent.”

By CHRIS McGOWAN

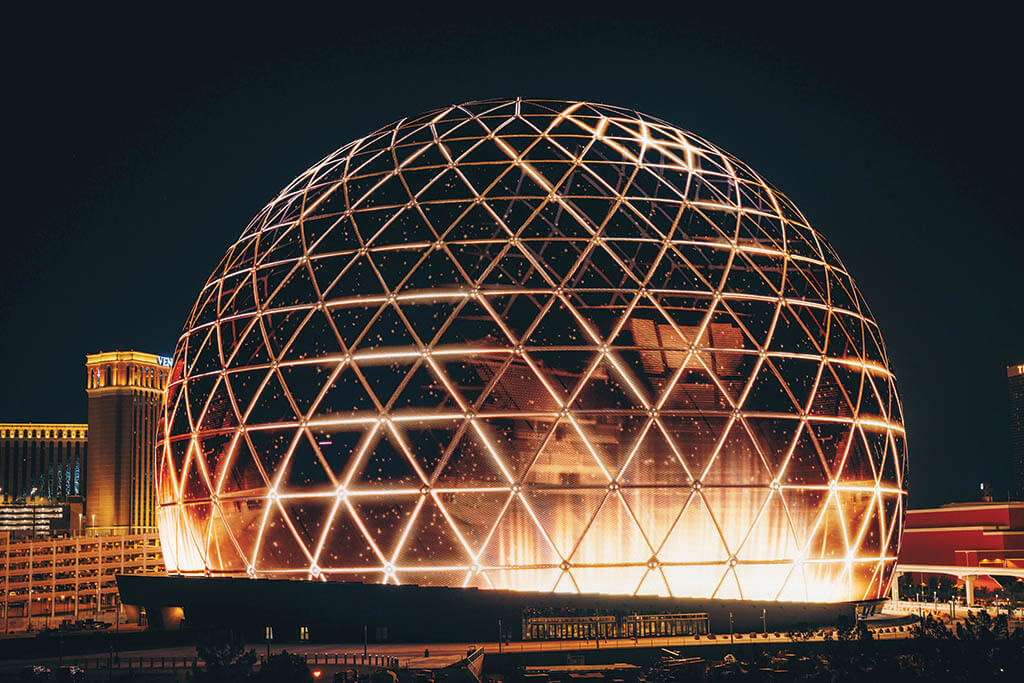

The newest addition to the Greater Las Vegas skyline is the 366-foot-tall Sphere. Its exosphere, the exterior shell of Sphere, has 580,000 square feet of LED panels that morph into all types of images. Sphere’s images range from a giant eyeball and leaf-like color bursts to an architectural lattice and a vivid moon. The Rockettes’ kicking and dancing also fill the Sphere and seem particularly well-suited to light up a Las Vegas night. (Photos courtesy of Sphere Entertainment)

On the outskirts of the Las Vegas Strip, a 366-foot-tall eyeball gazes out at the urban landscape. The traffic-stopping orb, simply named Sphere, has an exosphere of 580,000 square feet of LED panels that morph into the moon, an immense pumpkin, vast fireworks and much more.

While the exterior of Sphere is now an imposing part of the Greater Vegas skyline, its interior is an immersive, scaled-up entertainment destination with seats for 17,600+. Films, concerts and events are displayed on the largest high-resolution LED screen in the world, an arena-sized canvas for live action and visual effects.

The wraparound 16K x 16K resolution interior display is 240 feet tall, covers 160,000 square feet and is comprised of 64,000 LED tiles manufactured by Montreal-based SACO Technologies. The audio system, powered by Berlin’s Holoplot, uses 3D audio beam-forming technology and wave-field synthesis. Sphere Entertainment’s $2.3 billion project was designed by global architectural design firm Populous.

Sphere Entertainment developed bespoke technology for the outsized format, including its Big Sky 18K x 18K, 120 fps camera system. The Sphere Studios division’s main Burbank campus is dedicated to production and post-production of visuals and mixing of immersive audio for Sphere and houses Big Dome, a 28,000-square-foot, 100-foot-high geodesic dome that is a quarter-sized version of Sphere, for content screening.

The rock band U2 inaugurated Sphere with a five-month-plus residency for “U2: UV Achtung Baby Live at Sphere,” and showed off the venue’s vast creative possibilities for live shows. Director Darren Aronofsky’s immersive 50-minute film Postcard from Earth, which debuted soon after U2’s launch, tells the story of our planet seen from the future. Postcard used the Big Sky camera as well as Sphere’s 4D technologies, including an infrasound haptic system to simulate the rumbles of thunder or a rocket launch and sensory effects like breezes and scents.

Nevada’s most endangered species crowd Sphere’s interior in Es Devlin’s “Nevada Ark” for U2’s show. (Photo: Es Devlin. Courtesy of disguise and U2)

“At its best, cinema is an immersive medium that transports the audience out of their regular life, whether that’s into fantasy and escapism, another place and time or another person’s subjective experience. The Sphere is an attempt to dial up that immersion,” Aronofsky wrote in a press release.

Soon after Sphere’s opening, Autodesk and Marvel Studios teamed up to create an ad celebrating the former’s software and The Marvels film for an Autodesk customer event in Las Vegas. The Mill helped with the VFX, utilizing the Autodesk tools Maya and Arnold. The segment featured a gigantic Goose the flerken (a cat-like creature that transforms into a monstrous alien) on the exterior of Sphere, another massive visual certain to draw attention for miles around.

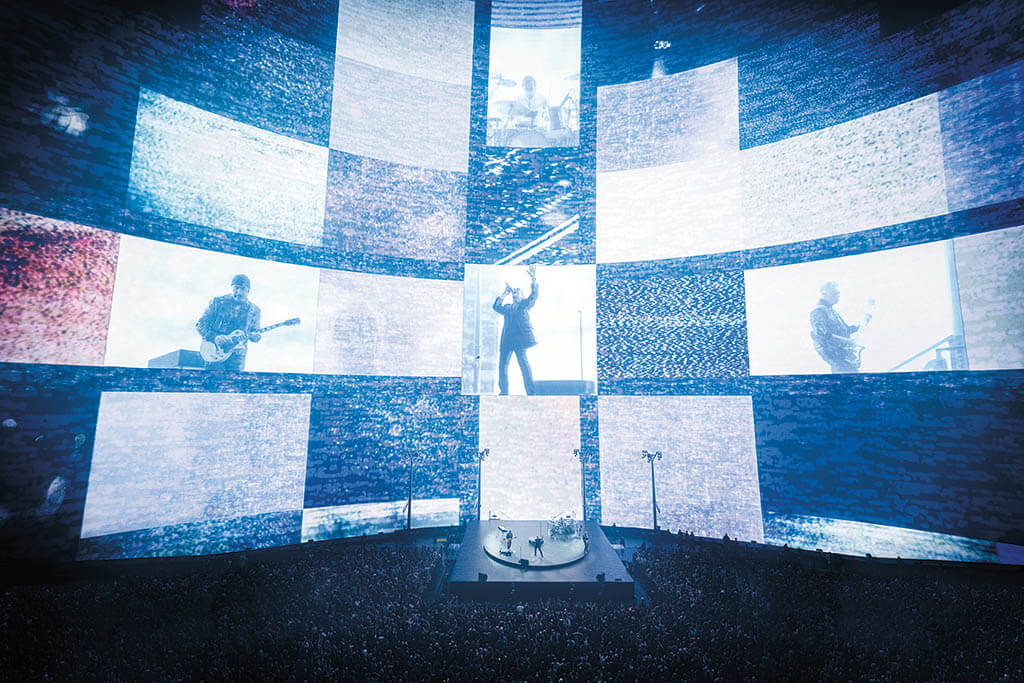

7thSense provides Sphere’s in-house media servers, processing and distribution systems utilized fully on Postcard from Earth. They are the venue’s main playback system. For “U2:UV,” the visuals were coordinated by Treatment Studio and powered at Sphere by a disguise playback system.

U2 AT SPHERE

Brandon Kraemer served as a Technical Director for Treatment Studio on the “U2:UV” residency at Sphere. He comments, “The unique thing that Sphere brings to the concert experience is a sense of immersion. Given that it’s a spherical image format and covers much of your field of view – and it’s taller than the Statue of Liberty on the inside – means it becomes an instant spectacle, and if you leverage that for all its uniqueness, you can’t help but blow audiences’ minds.”

Kraemer recalls, “Willie Williams [U2 Creative Director and Co-Founder of London-based Treatment Studio] contacted me in September of 2022 about the project. That was very early on in the process. Early creative was being discussed then, but just as importantly we started to embark on just how we were going to technically pull this off.”

Kraemer continues, “The majority of the visuals were designed by the artists at Treatment under the creative direction of Williams and Producer Lizzie Pocock. However, there were other collaborators on key pieces as well. Khatsho Orfali, David Isetta and their team from Industrial Light & Magic created an amazing cityscape that deconstructs itself for U2’s new song ‘Atomic City.’” He adds, “Marco Brambilla and his team at The Mill in Paris created a unique world for ‘Even Better Than the Real Thing,’ a dense psychedelic collage.”

To capture large-scale, ultra-high-resolution imagery, Sphere Entertainment’s Burbank-based unit, Sphere Studios, developed the 18K x 18K, 120fps Big Sky camera system, used in spectacular fashion by Darren Aronofsky’s Postcard from Earth. (Photo courtesy of Sphere Entertainment)

A massive cross of light is a simple but powerful visual at this scale, part of the band’s “U2: UV Achtung Baby Live at Sphere” residency. (Photo Kevin Mazur. Courtesy of disguise and U2)

There were numerous technical challenges and quite a few diplomatic challenges as well, and these two areas often overlapped. Kraemer explains, “Opening a building and working in a construction site while stepping through rehearsal programming is quite a feat. My hat’s off to U2’s legendary Production Manager, Jake Berry, for keeping the whole operation moving forward in the face of what were, at times, some serious headwinds. Getting content rendered on that screen has lots of challenges along the way, and we were also very fortunate to have the support of disguise and their [GX 3] servers as the backbone of the playback system. We couldn’t have produced the show we did without their support.” In addition, the show utilized a custom stage, based on a turntable design by Brian Eno, and covered by Yes Tech and ROE panels.

U2’s reaction was very positive, according to Kraemer. “The band put a lot of trust in the teams that Willie Williams put together, and they were pretty blown away by it all.”

DISGUISE

Peter Kirkup, disguise’s Solutions and Innovation Director, recalls, “We first became involved in Sphere through [U2’s Technical Director and Video Director] Stefaan ‘Smasher’ Desmedt. Together with Smasher, disguise has been working on U2 shows for decades, so it was a perfect fit.”

Kirkup adds, “Disguise’s software and hardware powered the visuals that were displayed on Sphere’s wraparound LED screen during the U2 show. First, our Designer software was used to help previsualize and edit the visual content – all brought together by the creative minds at Treatment Studio, including Brandon Kraemer and Lizzie Pocock as well as Willie Williams.”

Disguise’s Designer software allowed the creative team to previs their visuals on a computer with the help of a 3D digital twin of the Sphere stage. “This real-time 3D stage simulator meant ideas could be communicated more clearly and quickly to get everyone on the same page,” Kirkup notes. “Designer also helped the team to sequence the visuals into a timeline of beats and bars – and import audio to lock visuals to the beat. This helped create snappy, rhythmic edits and some extra looping segments that could be pulled in on the fly in case the band decided to do an extra riff on the day of the show.”
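Locking an edit to bars and beats comes down to converting a musical position into a frame number. The sketch below illustrates that arithmetic only; it is not disguise's Designer API. The function name, the 4/4 time signature and the 120 BPM tempo are assumptions made for the example, and the 60fps default borrows the playback rate quoted for the show.

```python
def beat_to_frame(bar, beat, bpm, fps=60, beats_per_bar=4):
    """Return the frame index at which a given bar/beat starts.

    bar and beat are 1-indexed, as on a musical score.
    bpm, fps and beats_per_bar are illustrative assumptions.
    """
    # Whole beats elapsed since the start of the timeline.
    elapsed_beats = (bar - 1) * beats_per_bar + (beat - 1)
    # One beat lasts 60 / bpm seconds.
    seconds = elapsed_beats * 60.0 / bpm
    # Snap to the nearest frame boundary.
    return round(seconds * fps)


# At 120 BPM each beat lasts half a second, i.e. 30 frames at 60fps.
first_downbeat = beat_to_frame(1, 1, bpm=120)
second_bar = beat_to_frame(2, 1, bpm=120)
```

A looping segment of the kind Kirkup describes would then simply be a frame range whose start and end both land on bar boundaries computed this way.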

Kirkup continues, “Once the visuals were complete, our software split and distributed the 16K video into sections. We were working with one contiguous LED screen but still needed to split the video into sections because of the sheer volume of content involved. We were playing real-time Notch effects and pre-rendered NotchLC content at 60fps across the Sphere’s 256,000,000 pixel, 16K x 16K interior canvas.

“Finally, our GX 3 media servers enabled all individual pieces to be perfectly in sync throughout the show,” Kirkup says. “This technology also allowed us to composite layers of video together in real time. For example, the video feed of the band that cinematic cameras were capturing during the show could be composited into our LED visuals from the Designer software. Each server was also upgraded with a 30-terabyte hard drive, so we had local storage machines for playout and 100GB networking back to the content store for file transfers and media management.”

Kirkup adds, “We furthered our Single Large Canvas workflows, which enable content to be broken up into pieces and distributed across a cluster of machines – essential work to make a project like this come to life. We also introduced some custom color pipeline work for Sphere, adapting our standard color pipeline to match the unique characteristics of the in-house LED system.” He continues, “A big challenge was handling such a large volume of content across 256,000,000 pixels – in real time. There were 18,000 people watching the show, and they all had their camera phones ready to broadcast to even more people, so we really had to make sure the show went well.”
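To make the idea of breaking one canvas into pieces for a cluster concrete, here is a minimal sketch that divides a square canvas into a grid of tiles, one per playback node. It is not disguise's implementation. The 16,000 x 16,000 dimensions are an assumption chosen to match the quoted 256,000,000-pixel figure, and the 4 x 4 grid, the `Tile` type and the `split_canvas` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    node: int    # hypothetical index of the machine that plays this tile
    x: int       # left edge in canvas pixels
    y: int       # top edge in canvas pixels
    width: int
    height: int


def split_canvas(width, height, cols, rows):
    """Divide a canvas into cols x rows tiles that cover it exactly once."""
    tiles = []
    for r in range(rows):
        # Integer boundaries guarantee no gaps or overlaps between rows.
        y0 = r * height // rows
        y1 = (r + 1) * height // rows
        for c in range(cols):
            x0 = c * width // cols
            x1 = (c + 1) * width // cols
            tiles.append(Tile(r * cols + c, x0, y0, x1 - x0, y1 - y0))
    return tiles


# Assumed canvas size and grid, for illustration only.
tiles = split_canvas(16_000, 16_000, cols=4, rows=4)
total_pixels = sum(t.width * t.height for t in tiles)
```

Because the tile boundaries come from integer division of the same running totals, the pieces always sum back to the full canvas, which is the property a synchronized cluster depends on.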

Kirkup remarks, “Bono mentioned this during the show, but I believe the most important thing about Sphere is that for the first time, a venue of this scale is being created with musicians in mind. In the past, musicians needed to squeeze into sporting arenas or stadiums that weren’t created for music – they may have had tiny screens or the wrong acoustics. With Sphere, that’s all changed. For real-time graphics and VFX artists, that’s a big trend to watch for in 2024 and beyond. I expect to see more venues designed specifically to highlight 3D visuals. With that, more VFX artists and studios will be pulled in to develop not only movie and TV effects – but incredible visuals for live events, too. The two industries will start to blur.”

7THSENSE

7thSense – a creative software and technology company based in Sussex, England – put together the Sphere in-house playback system and provides hardware for media serving, pixel processing and show control. “Building a first-of-its-kind venue like Sphere brought with it a significant number of challenges that the 7thSense team was keen to dig their collective fingers into,” explains Richard Brown, CTO of 7thSense.

Brown notes, “Managing exceptionally large canvases of playback, generative and live media as a single harmonious system is of utmost importance in a venue of this scale, and it is a workflow and underpinning technology we have been working on for quite some time. With a 16K x 16K canvas size, Sphere placed a priority on accelerating the development of the tools for media playback, multi-node rendering of generative assets and live compositing from multiple ST 2110 streams, as well as for pre-visualizing the show without having access to the full system. Because time in the venue is an incredibly rare commodity, anything that can be done ‘offline’ helps to make the time in the venue more productive.”

The visuals for U2’s “Atomic City,” with VFX work by ILM, includes a stunning deconstruction of Las Vegas going back in time. (Photo: Rich Fury. Courtesy of disguise and U2)

The desert landscape around Las Vegas became a backdrop for U2’s “Atomic City.” (Photo: Rich Fury. Courtesy of disguise and U2)

Marco Brambilla’s dense psychedelic collage “King Size,” put together with the help of the Mill in Paris, is an ode to Elvis Presley that accompanies the U2 song “Even Better than the Real Thing.” (Photo: Rich Fury. Courtesy of disguise and U2)

The interior display of Sphere is 240 feet tall and covers 160,000 square feet with LED panels from SACO Technologies. (Photo: Rich Fury/Ross Andrew Stewart. Courtesy of disguise and U2)

The interior display of Sphere can create huge individual displays for any performer, and the venue uses 3D audio beam-forming technology and wave field synthesis for an appropriately big and precise sound. (Photo courtesy of disguise and U2)

The huge $2.3 billion Sphere has altered the Greater Las Vegas skyline and become an entertainment destination, celebrating its launch in September 2023 with the “U2: UV Achtung Baby Live at Sphere” residency. (Photo courtesy of Sphere Entertainment)

Brown adds, “High-speed streaming of uncompressed media from Network Attached Storage (NAS) is something we have been wanting to do for a long time, but the technology was not sufficiently advanced to support the bandwidth and timely delivery of data until very recently. Fortunately, the use case for this technology aligned very much with the desired workflow at Sphere, giving us the chance to really dig into what could be an industry-changing technology for media production and presentation systems.”

Brown continues, “Managing synchronized media playback across dozens of servers is one thing, but making it straightforward for a show programmer to build the show that spans dozens of servers is quite another. 7thSense developed an Asset Logistics workflow that simplifies what actual movie frames each server streams from the NAS based on representative meta-media used for programming the show timeline.”

Brown explains, “Each server is configured with what section of the dome it is responsible for playing back, and this information, coupled with the name of the movie from the timeline, is used to determine the file path on the NAS that each media server uses to access the appropriate movie frames. This workflow reduces user error and makes timeline programming significantly faster than managing individual movies per server.”
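The lookup Brown describes, combining a server's assigned section with the movie name from the timeline to get a file path, can be sketched as follows. This is a hypothetical illustration, not 7thSense's Asset Logistics code: the directory layout, the file-naming pattern, the `tif` extension and the `frame_path` function are all assumptions.

```python
from pathlib import PurePosixPath


def frame_path(nas_root, movie, section, frame, ext="tif"):
    """Build the path of one movie frame for one section of the dome.

    Assumed layout: <nas_root>/<movie>/<section>/<movie>_<section>_<frame>.<ext>
    """
    filename = f"{movie}_{section}_{frame:06d}.{ext}"
    return PurePosixPath(nas_root) / movie / section / filename


# Each server knows only its own section; the timeline supplies the movie name.
server_section = "sector_07"          # hypothetical section label
path = frame_path("/mnt/nas/shows", "postcard", server_section, 42)
```

With a scheme like this, the show programmer places a single timeline entry per movie and each server derives its own frames, rather than someone assigning individual movie files to every machine.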

Brown comments that Sphere is the first entertainment venue of its kind when it comes to the size and resolution of the media being presented to an audience. He says, “It is imperative that all media players, generative engines and pixel processors are working in absolute synchronization, or the illusion of immersion is lost for the audience. Worse than that, image tearing or jitter could cause the audience to become ill because of the immersive nature of the media plane. Everywhere you look, you are surrounded by the media.”

In addition, Brown notes, “Not only is it our first major application of ST 2110, it just happens to be the largest ST 2110 network in an entertainment venue on the planet! 7thSense has been in the world of immersive presentations in planetaria, domed theaters, museums and theme park attractions since going into business nearly 20 years ago. But what has been created at Sphere is something new, a destination live-event venue, and the technology far surpasses what has been built to date. This hybrid type of entertainment has the potential to create its own category of immersive live show experience. It’s exciting to be part of the team building it from the ground up.”

“I think it’s an experience like no other,” Treatment Studio’s Kraemer says about Sphere. “It was a thrilling experience to be part of the first creative team to produce an amazing show there. I think ‘U2:UV’ will be a very tough act to follow, but I think there is a tremendous opportunity to give an audience something that is impossible in a stadium or arena show, and I look forward to seeing how this all evolves.”

By CHRIS McGOWAN

Snoop Dogg at Astro Project motion capture studio in Santa Monica for his “Crip Ya Enthusiasm” music video utilizing the Vicon system and StretchSense gloves. (Image courtesy of Vicon and Astro Project, LLC)

Motion capture, performance capture and volumetric video technologies are rapidly advancing, incorporating AI and ML to a greater extent and focusing on enhancing realism, precision and accessibility. Peter Rabel, Technical Product Manager at Digital Domain, comments, “The trend towards real-time capabilities has become prominent, allowing for immediate feedback and integration into virtual environments, video games and live events. As we integrate artificial intelligence and machine learning as tools to enhance these functions’ capabilities further, it will enable automated analysis and capture of movements in real-time, which will help save time on the process, leading to cost savings. It’s essential for us to stay updated on recent developments and industry trends to understand the current trajectory of these capture technologies as technology continues to evolve so we can better serve our clients.”

VICON: MARKERLESS

Vicon made a splash in 2023 with its Los Angeles SIGGRAPH announcement of the debut of its machine learning (ML) powered markerless mocap. The news came after some three years of research and development focusing on the integration of ML and AI into markerless motion capture at Vicon’s R&D facility in Oxford, U.K. Vicon collaborated on the technology with Artanim, the Swiss research institute that specializes in motion capture, and Dreamscape Immersive, the VR experience and tech company.

“The ability to capture motion without markers while maintaining industry-leading accuracy and precision is an incredibly complex feat,” says Mark Finch, Vicon’s Chief Technology Officer. “After an initial research phase, we have focused on developing the world-class markerless capture algorithms, robust real-time tracking, labeling and solving needed to make this innovation a reality. It was our first step towards future product launches, which will culminate in a first-of-its-kind platform for markerless motion capture.”

On the mocap set of She-Hulk: Attorney at Law with diode suit and Digital Domain’s Charlatan “face-swapping” system. (Photo: Chuck Zlotnick. Courtesy of Marvel Studios)

Finch continues, “What we demonstrated at SIGGRAPH was markerless recognition of the human form – using prototype cameras, software and algorithms – to track six people, with their full body solved in real-time, in a VR experience. This completely removes the need for participants to wear heavy gear with motion capture markers. As a result, the VR experience is more seamless and believable as the motion capture technology is largely invisible and non-invasive.” Finch adds, “Of the technology we showcased, Sylvain Chagué, Co-Founder and CTO of Artanim and Dreamscape, said, ‘Achieving best-in-class virtual body ownership and immersion in VR requires both accurate tracking and very low latency. We spent substantial R&D effort evaluating the computational performance of ML-based tracking algorithms, implementing and fine-tuning the multi-modal tracking solution, as well as taking the best from the full-body markerless motion capture and VR headset tracking capabilities.’ ”

ROKOKO VISION

Based in Copenhagen, Rokoko had two major announcements on the product front in the last year. “First, with Rokoko Vision, our vision AI solution that allows for suit-less motion capture from any camera. We released the first iteration mainly to get to know the space and gather insights from early use of the product,” CEO and Founder Jakob Balslev comments. “It’s becoming increasingly clear to us what the users need, and we are excited to release more updates on that front.”

Rokoko’s Coil Pro is the company’s recent innovation in motion capture hardware, featuring no drift and no occlusion through a fusion of EMF and IMU capture. (Image courtesy of Rokoko)

OptiTrack’s PrimeX 120 and PrimeX 120W cameras offer the company’s longest camera-to-marker range for Passive and Active markers. OptiTrack accuracy with more range enables very large tracking volumes for a wide variety of training and simulation scenarios, extreme ground or aerial robotic facilities and larger cinematic virtual production studios. (Image courtesy of OptiTrack)

OptiTrack’s PrimeX cameras quickly identify and track Passive and Active markers. (Image courtesy of OptiTrack)

He adds, “Second, we unveiled our Coil Pro – the biggest innovation we’ve ever done on the hardware side – and, in my eyes, probably the biggest innovation ever in motion capture. Through a fusion of EMF and IMU capture, the Coil Pro unlocks the holy grail of motion capture: No drift and no occlusion. With drift-free global position over time and no need for line of sight from optical solutions, the Coil Pro is the best of both worlds of mocap [IMU and optical]. The underlying platform, named Volta Tracking Technology, fuses EMF and IMU and will be at the core of all our motion capture hardware solutions going forward.”

DIGITAL DOMAIN: CHARLATAN

Digital Domain is further developing its machine learning neural rendering software Charlatan (sometimes referred to as a face-swapping tool). “Acknowledging the expense and time associated with traditional methods, including our top-tier Masquerade [facial capture] system, we developed Charlatan to introduce efficiency and affordability,” Rabel comments. “Several years ago, Charlatan was created using machine learning techniques. This innovative approach involves utilizing real photography of an individual’s face and applying enhancements, seamlessly transferring it to another person’s face, or even manipulating discrete aspects such as aging or de-aging. Recently, we have been developing Charlatan 3D, which evolves this technology to produce full 3D geometry from this process but at a lower cost and simpler capture conditions than Masquerade. In essence, Charlatan represents a significant stride towards streamlining the creation of lifelike digital humans with unparalleled realism.”

OPTITRACK: NEW CAMERAS

OptiTrack provides tracking solutions that vary in use, including AAA game studios, medical labs, and consumer and prosumer budget solutions. In November the firm announced its three most advanced motion capture cameras: the PrimeX 120, PrimeX 120W and SlimX 120. “With higher resolution and increased field of view, these new additions enable larger tracking areas for a wider variety of training and simulation scenarios and larger cinematic virtual production studios,” says Anthony Lazzaro, Senior Director of Software at OptiTrack. All three cameras, which are designed and manufactured at OptiTrack’s headquarters in Corvallis, Oregon, feature their highest-yet resolution, 12 megapixels. “With the PrimeX 120, customers benefit from a standard 24mm lens while the PrimeX 120W comes with an 18mm lens with a wider field of view. [And] we have 24mm or 18mm wide lens options available with the SlimX 120.”

Lazzaro continues, “We also released a more informative and intuitive version of our mocap software, which is now compatible with all OptiTrack mocap cameras. Motive 3.1 is aimed at simplifying high-quality, low-latency performance motion tracking, offering users easy-to-use presets and labeling for tracked items that deliver the best possible motion data while saving time and eliminating extra steps. Customers also have greater visibility into possible issues and can automatically resolve against the harshest of tracking environments.”

STRETCHSENSE: MOCAP GLOVES

Founded in Auckland in 2012, StretchSense took on the mission to build the world’s best stretchable sensors for comfortably measuring the human body. “Building on top of our sensor technology, in 2019 we pivoted the business to focus on motion capture gloves for AAA studios, indie studios, streamers, VR/AR, live shows and more,” explains StretchSense Co-Founder and VP Partnerships & New Markets Benjamin O’Brien.

“Our Studio Gloves are incredibly unobtrusive, with a less than 1mm thick sensor layer on top of breathable athletic fabric, and a small transmitting module,” O’Brien says. “This is more than just a comfort and style thing though; it means that our gloves don’t get in your way, and you can continue to type, use a mouse, hold a prop, use your phone or just get a pizza from the door. Once you start to think about mixed-reality applications, this becomes even more critical, as our gloves allow you to switch seamlessly between interacting with virtual spaces and the real world.”

O’Brien adds, “Our mission is to democratize motion capture, allowing independent content creators and streamers to create incredible and immersive stories and experiences. To achieve this, we have a long-term goal of getting our gloves down to a true consumer price point, which will really open up the space. At $795, we think our latest StretchSense Studio Glove is the biggest step the industry has ever taken towards this goal; less than two years ago, something with similar performance would have cost well over $5,000.”

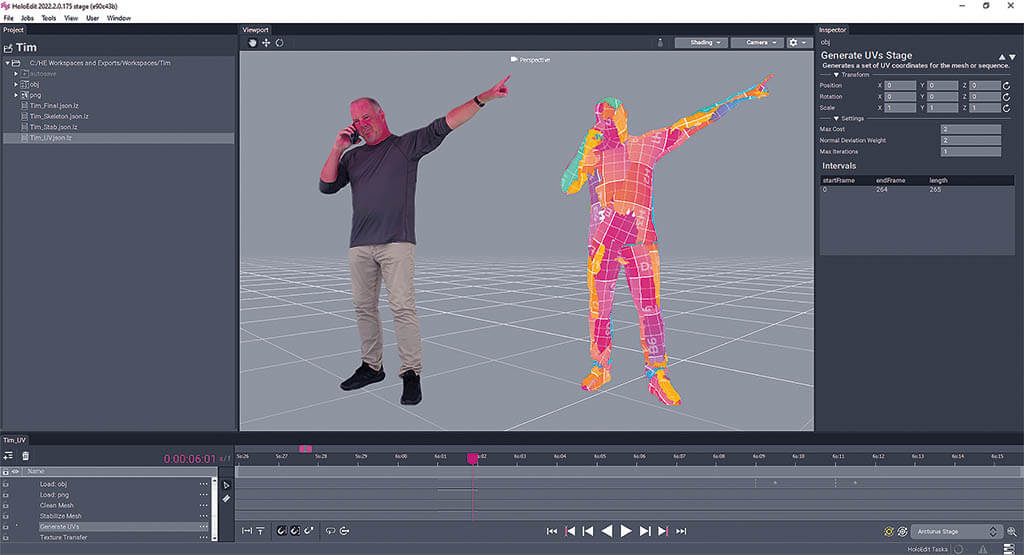

ARCTURUS AND VOLUMETRIC VIDEO

Based in Beverly Hills, Arcturus Studios was founded in 2016 by veterans of DreamWorks, YouTube, Autodesk, Netflix and other notable companies. “Together, they saw the potential for volumetric video and decided to work together to steer its development,” recalls Piotr Uzarowicz, Head of Partnerships and Marketing at Arcturus. “That led to the creation of the HoloSuite tools, consisting of HoloEdit – a tool that can edit the 3D performances of performers recorded with volumetric video – and HoloStream, software that can compress a completed volumetric video file and stream it to any 2D or 3D device, even if the broadband signal is unstable. Together, HoloSuite has helped make it possible to use volumetric video for everything from e-commerce to AR projects to virtual production and more.”

Uzarowicz continues, “Arcturus took over Microsoft’s Mixed Reality Capture Studios (MRCS) business [in 2023], including the development of that capture system – the most sophisticated in the world – as well as the rights to maintain and supply MRCS licenses to studios around the world. That has put Arcturus in a unique position where it is now developing for all stages of volumetric video, from the capture and editing all the way to the final distribution.”

“One of our goals has always been to make volumetric video more accessible. We’re looking at new ways to make it easier to capture volumetric videos using fewer cameras, including the use of AI and machine learning. With the MRCS technology and our licensees, we are working with some of the best and most creative content creators in the world to find where the technology can evolve and improve the production experience,” comments Uzarowicz. “We just released a new video codec called Accelerated Volumetric Video (AVV) that makes it possible to add more volumetric characters to a digital environment. With the MRCS technology, the quality of a captured performance is better than ever. Volumetric video is constantly evolving,” he adds.

OptiTrack’s Motive 3.1 advanced motion capture software can be paired with any of OptiTrack’s motion capture cameras, including the premium PrimeX, Slim or low-cost Flex series. Motive 3.1 also offers trained markersets, enhanced sensor fusion and pre-defined settings. (Image courtesy of OptiTrack)

StretchSense makes motion capture gloves for major and indie studios, streamers, VR/AR and live shows. (Image courtesy of StretchSense)

StretchSense’s mocap gloves are unobtrusive, with a less than 1mm-thick sensor layer on top of breathable athletic fabric and a small transmitting module. StretchSense’s $795 Studio Glove is a step toward the company’s goal of getting its gloves down to a true consumer price point. (Image courtesy of StretchSense)

“The trend towards real-time capabilities has become prominent, allowing for immediate feedback and integration into virtual environments, video games and live events. As we integrate artificial intelligence and machine learning as tools to enhance these functions’ capabilities further, it will enable automated analysis and capture of movements in real-time, which will help save time on the process, leading to cost savings.”

—Peter Rabel, Technical Product Manager, Digital Domain

Arcturus took over Microsoft’s Mixed Reality Capture Studios (MRCS) business in 2023, including development of the capture system, as well as rights to maintain and supply MRCS licenses to studios worldwide. Arcturus also now develops for all stages of volumetric video. (Image courtesy of Arcturus)

Arcturus’s HoloSuite tools consist of HoloEdit – a tool that can edit the 3D performances of performers recorded with volumetric video – and HoloStream, software that can compress a completed volumetric video file and stream it to any 2D or 3D device, even if the broadband signal is unstable. With HoloSuite it’s possible to use volumetric video for e-commerce, AR projects and virtual production. (Image courtesy of Arcturus)

MOVE AI

Move AI announced the official release of its single-camera motion capture app, Move One, in late November. “The app is now available to animators and creators looking to bring realistic human motion to their 3D characters,” said the company. “Move AI makes it easy to capture and create 3D animations.”

AI/ML

“Arcturus is currently experimenting with AI and machine learning in several ways. From the moment we were founded, one of our main goals has always been to make volumetric video more accessible, and AI can help us do that in a few different ways,” Uzarowicz comments. “Among other things, one of the areas we are currently focusing on in our R&D is using AI to help us capture the same level of quality – or better – we can currently capture but use fewer cameras. One of the things that makes our MRCS technology the best in the world is the software that converts the multiple captured recordings into a single 3D file. With AI, we hope to improve that process.” Regarding AI/ML, O’Brien says, “We are seeing many companies using motion capture to create their own proprietary databases for training or tuning generative AI models, and we are looking at how we can lean into this. Finally, we are ourselves constantly investing in machine learning to improve the data quality [of] our products.”

“Given our experience with machine learning, we see Gen AI as a tool like any other in our toolbox, enabling us to create artistically pleasing results efficiently in support of the story,” Digital Domain’s Rabel says. “We have found that the combination of powerful tools, such as machine learning and AI, with our artists’ creative talent produces the photorealistic, relatable, believable and lifelike performances we are striving for. We feel the nuances of an actor’s performance in combination with our AI and machine learning toolsets are critical to achieving photorealistic results that can captivate an audience and cross the uncanny valley.”

Lazzaro comments, “OptiTrack already uses ML algorithms to derive optimal solutions for things like continuous calibration and trained markersets. Continuous calibration takes existing visible objects in a scene, i.e. markers, and uses that data to determine how to make small adjustments to fix calibration issues related to bumps, heat or human error. Trained markersets allow you to feed marker data into an algorithm to make a model that can track objects that were previously not trackable, such as trampolines, jump ropes and other non-rigid objects.” Lazzaro adds, “Advances in AI and ML will continue to shape the way that objects are tracked in the future.” Rokoko’s Balslev notes, “AI/ML will fundamentally change the motion capture space. Text-to-motion tools are emerging and maturing and will eventually completely disrupt the stock space for online marketplaces and libraries. These tools will however not be able to replace any custom mocap that requires acting and specific timing.”

“Our mission is to democratize motion capture, allowing independent content creators and streamers to create incredible and immersive stories and experiences. To achieve this, we have a long-term goal of getting our gloves down to a true consumer price point, which will really open up the space. At $795, we think our latest StretchSense Studio Glove is the biggest step the industry has ever taken towards this goal; less than two years ago, something with similar performance would have cost well over $5,000.”

—Benjamin O’Brien, Co-Founder and VP Partnerships & New Markets, StretchSense

Move AI offers a single-camera motion capture app, Move One, for animators looking to bring realistic human motion to their 3D characters, making it easy to capture and create 3D animations. (Images courtesy of Move AI)

VR AND MOCAP

“We [Vicon and Dreamscape Immersive] are together mapping out just how far markerless mocap can go in providing a more true-to-life adventure than any other immersive VR experience by allowing for more free-flowing movement and exploration with even less user gear,” Vicon’s Finch comments. “Dreamscape has said it has long awaited the time when markerless could break from concept and into product, where the technology could support the precision required to realize its amazing potential. We’re testing that potential together now.” Finch adds, “Seeing people’s initial reactions to VR when they’re fully immersed is remarkable. The fantasy-reality line blurs, the more freedom you have in a VR space, which is reduced when a user is tethered and they feel the pull of the cable or know they’re wearing a backpack.” He continues, “There’s also the customer experience element that’s a central driver in all of this. People’s experience with markerless is a big wow moment. Markerless is going to lead to more magic – more wow.”

Lazzaro explains, “Mocap is used in all sorts of VR and AR applications. Typically, home systems use what is called inside-out tracking to have a head-mounted display [HMD] track the world around a user. This works great for HMD and controller tracking, but can’t be used to see other people wearing HMDs. OptiTrack uses an approach called outside-in tracking where we track the HMD, controllers and props using external cameras. This allows users to build location-based VR experiences in which multiple people can go through an experience together or engineers can work on designs in VR as a group.”

OUTLOOK

“We think these markets [motion capture, performance capture and volumetric video] will all be changed with the continued increase in accessibility,” comments StretchSense’s O’Brien. “You can now do full-body mocap for less than the cost of a new iPhone, and basic volumetric capture can now be had for free on that same iPhone. This means different things for different markets: On a major AAA studio, you are going to see mocap happening on all of the people all of the time, and also on more ambitious projects that have more animated content than ever before. For independent creators, the financial costs of getting into mocap are dropping away so more people can join the space. Finally, there are millions of streamers worldwide who are getting new ways to connect with their community and make money while doing so by stepping into virtual worlds.”

“Mocap has a bright future in a variety of markets,” OptiTrack’s Lazzaro says. “This includes but is not limited to movies, video games, medical applications, robotics, measurement and VR. Mocap techniques are also becoming more commonplace with V-Tubers and other prosumer applications.”

By TREVOR HOGG

Images courtesy of Netflix.

A major visual effects undertaking was constructing the environment and crowd at Tsinghua University watching the torture of intellectuals during the Chinese Cultural Revolution.

A computational conundrum occurs when three celestial bodies mutually influence one another’s motion through gravitational pull. This serves as the premise for the science fiction series 3 Body Problem by novelist/series writer Liu Cixin, where an alien race living on an environmentally unstable planet caught between a trio of suns sets in motion a plan to invade Earth with the assistance of human conspirators. Adapting the novels for Netflix are Game of Thrones duo David Benioff and D.B. Weiss, along with True Blood veteran Alexander Woo. The first season of 3 Body Problem encompasses eight episodes that feature major visual effects spanning environment builds, a multi-dimensional supercomputer compressed into a proton, a sliced and diced oil tanker, characters being rehydrated/dehydrated and a virtual reality game that literally feels real. The epic scope of the project required the creation of 2,000 shots by Scanline VFX, Pixomondo, BUF, Image Engine, Screen Scene and El Ranchito. An in-house team took care of additional cleanups, which ranged from a character blinking too much to having to paint out an unwanted background element.
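For readers curious about the conundrum behind the title, the mutual pull of three bodies can be sketched numerically. The example below is a minimal two-dimensional toy, assuming Newtonian gravity in arbitrary units; the function names, the `softening` term (which avoids division by zero at close range) and the velocity Verlet time step are illustrative choices, not anything drawn from the series or its production.

```python
def accelerations(positions, masses, g=1.0, softening=1e-3):
    """Acceleration on each body from every other body (2D, Newtonian)."""
    result = []
    for i, (xi, yi) in enumerate(positions):
        ax = ay = 0.0
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            dist_sq = dx * dx + dy * dy + softening ** 2
            inv_dist_cubed = dist_sq ** -1.5
            ax += g * masses[j] * dx * inv_dist_cubed
            ay += g * masses[j] * dy * inv_dist_cubed
        result.append((ax, ay))
    return result


def step(positions, velocities, masses, dt):
    """Advance all bodies by one time step using a velocity Verlet update."""
    acc = accelerations(positions, masses)
    half_v = [(vx + 0.5 * dt * ax, vy + 0.5 * dt * ay)
              for (vx, vy), (ax, ay) in zip(velocities, acc)]
    new_pos = [(x + dt * vx, y + dt * vy)
               for (x, y), (vx, vy) in zip(positions, half_v)]
    new_acc = accelerations(new_pos, masses)
    new_vel = [(vx + 0.5 * dt * ax, vy + 0.5 * dt * ay)
               for (vx, vy), (ax, ay) in zip(half_v, new_acc)]
    return new_pos, new_vel
```

Calling `step` repeatedly traces out the trajectories. Because every body’s acceleration depends on the current positions of the other two, small changes in the starting conditions can lead to very different paths over time.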

Previs was an indispensable tool. “It’s a complete game-changer being able to do everything in Unreal Engine,” Visual Effects Supervisor Stefen Fangmeier states. “We did nearly no storyboarding. It was essentially camerawork. The funny thing was they were trying to get me to use a camera controller, and I said, ‘No. I’m a curve guy.’ I set a keyframe here and a keyframe there and interpolate. I even reanimated characters, which you can do in Unreal Engine in the most elegant way. You can take a couple of big performances and mix them together; it’s a fantastic tool. We worked with NVIZ in London who would prep all of these scenes, do the animation, then I would go shoot and light it; that was a great joy for me, being interactive. What was so interesting about 3 Body Problem was there is an incredible variety of work.”
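The “curve guy” approach Fangmeier describes, setting a keyframe here and there and interpolating between them, can be sketched in a few lines. This is a minimal illustration using straight-line interpolation; it is not Unreal Engine’s API, curve editors typically offer smoother curve types as well, and the `interpolate` function and keyframe layout are assumptions made for the example.

```python
from bisect import bisect_right


def interpolate(keyframes, time):
    """Linearly interpolate a value from (time, value) keyframes sorted by time."""
    times = [t for t, _ in keyframes]
    if time <= times[0]:
        return keyframes[0][1]       # hold the first key before the curve starts
    if time >= times[-1]:
        return keyframes[-1][1]      # hold the last key after the curve ends
    right = bisect_right(times, time)
    (t0, v0), (t1, v1) = keyframes[right - 1], keyframes[right]
    blend = (time - t0) / (t1 - t0)  # 0.0 at the left key, 1.0 at the right key
    return v0 + blend * (v1 - v0)
```

A camera height keyed at `[(0.0, 0.0), (2.0, 10.0), (4.0, 0.0)]` would rise to its peak at the two-second mark and return to the ground by four seconds, with every in-between frame computed rather than set by hand.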

Vedette Lim as Vera Ye in one of the many environments given the desired scope and vastness through digital set extensions.

A unique cinematic moment involves an oil tanker being sliced by nanowires as part of an elaborate trap to capture a hard drive belonging to a cult that supports the San-Ti invading Earth. “People get sliced every 50 cm, which we did mostly with digital doubles and a few practically built hallways and interior buildings. When you slice something that heavy vertically at 50 cm increments, the weight of what’s above it keeps it in place until the bow hits the shoreline. The dish on top of it collapses into the Panama Canal, which we created as a full CG environment,” Fangmeier states.

Opening the series is a massive crowd gathering at Tsinghua University during the Chinese Cultural Revolution to watch the torture of intellectuals, and because of the controversial nature of the subject matter shooting in Beijing was not an option. “Ultimately, we built the environment from photography and then took some liberties,” Visual Effects Producer Steve Kullback describes. “We wanted it to be realistic, but how big is the quad? What did the buildings actually look like? I don’t think anybody is tracking it quite that precisely, but what we ended up with is having 100,000 screaming students in front of us, and that was all shot quite virtually with a stage set that was built out and extended. It was an array of bluescreens on Manitous that were set up to move around and reposition behind 150 extras.” Crowd tiling was minimal. “We did one shot, which was a poor artist’s motion control. The director wanted a shot where the camera is pushing out towards the stage over the crowd, so what we did was start in the foreground pushing over it, repeat the move pushing over it and move everyone up. We put the pieces together, and it worked quite well. We didn’t have a motion control crane, just a 50-foot Technocrane and a good team that was able to repeat their moves nicely,” Kullback says.

Bai Mulin (Yang Hewen) sits alongside Young Ye Wenjie (Zine Tseng) who makes a fateful first contact with the San-Ti, which sets their invasion plans in motion.

A radar dish test at Red Coast Base kills a flock of birds that were entirely CG.

Sophon (Sea Shimooka) is an avatar in a VR game created by the San-Ti to illustrate the destructive environmental impact of living next to three suns.

The reflective quality of the VR headset meant that extensive photogrammetry had to be taken so each set piece could be reconstructed digitally.

One of the major environments simulated in the VR game is the observation deck of the Pleasure Dome constructed by Kublai Khan.

Another key environment build was the Red Coast Base where astrophysics prodigy Ye Wenjie makes first contact with the San-Ti in the 1960s, which sparks an invasion conspiracy. “For Red Coast Base, we had part of an observation base in Spain that was on a mountaintop, and it was a windy day with no rain, so we had some nice sunsets and great clouds,” Visual Effects Supervisor Rainer Gombos remarks. “Some of the buildings didn’t match what we wanted, and the main building was missing the large radar dish. We only had the base built for that. We had some concepts from the art department for how the extensions should work, and then we did additional concept work once we had the specific shots and knew how the sequence would play out.” The years leading up to the present day have not been kind to the Chinese national defense facility. “The roofs have collapsed, so we had to design that. It had to look like winter and cold when it was actually a hot spring day with lots of insects flying around, which had to be painted out. There is a sequence where the radar dish is being used for some test, and birds are flying from the forest and get confused by what is happening, fly close to the dish and die. There were a lot of full CG shots there and CG birds that had to be added. Also, one of the characters revisits the base to commit suicide, so we had to introduce a digital cliff that allowed her to walk up to the side of the dish and look over,” Gombos adds.

30 million Mongol soldiers appear in front of the Pleasure Dome before being lifted into the air because of the gravitational pull of the three suns.

Simulating what life is like on Trisolaris is a virtual reality experience developed by the San-Ti that demonstrates the global catastrophes caused by living in close proximity to three suns. “It was described as a simple arid desert landscape,” Fangmeier explains. “The more unique aspect of that was a certain lighting change. One sun, small and in the distance, was rising, and then suddenly that goes away and it’s night again. Having the light on the actors move that quickly was tricky to achieve on set. We decided along with Jonathan Freeman, the DP for Episodes 101 and 102, to shoot that in a LED stage with a bunch of sand on the ground where we could animate hot spots and the colors of the panels even though we were going to replace all of that in CG.” Being in the realm of VR meant that the destruction could be fantastical, such as 30 million Mongol soldiers being lifted in the air because gravity no longer exists, or witnessing the entire landscape engulfed by a sea of lava. Fangmeier explains, “Then, we have some pseudoscience, like going inside of a particle accelerator. The San-Ti have sent these two supercomputers the size of a proton to stop the progress of human technology, so when they arrive 400 years later [Trisolaris is over three light years from Earth], we won’t be able to easily destroy their fleet. The proton [referred to as a sophon] unfolds into this giant two-dimensional sphere that then gets etched with computer circuitry. We talked a lot about going from 10 dimensions down to two and then going back to a 10-dimensional object. It’s stuff where you go, ‘That’s what it said in the book and script. But how do you visualize that?’”

The VR game created by the San-Ti is so sophisticated that it stimulates the five senses of users such as Jin Cheng (Jess Hong).

The VR game setting allowed for a more hyper-real visual language and the ability to defy physics, like when Sophon (Sea Shimooka) talks with Jin Cheng (Jess Hong) and Jack Rooney (John Bradley) in Episode 103.

The Follower (Eve Ridley) and Sophon (Sea Shimooka) are San-Ti appearing in human form to make it easier for VR users from Earth to relate to them.

Eiza González portrays Auggie Salazar, a member of the Oxford Five, which attempts to foil the invasion plans of the San-Ti.

Cinematographer Jonathan Freeman made use of complex and specific lighting panels for the VR setting shots to emulate what it would be like surrounded by three suns.

To preserve their species until the chaotic era gives way to a stable one, the San-Ti have a specific methodology that involves dehydrating and rehydrating their bodies. “It happens in two places and provided us with unique challenges and creative opportunities,” Kullback observes. “The first time we see it is when the rolled-up dehydrated bodies are being tossed into the water by the army to bring our characters back to life. The rolled-up bodies that get rehydrated were a prop that was designed by the prosthetics artists and looked quite beautiful. We go underwater and see the roll land and begin to unfold. The camera is below it and the sun is above the water, so you have these beautiful caustics and an opportunity for all kinds of subsurface scattering and light effects that make the image magical and ethereal and support the birthing process that it’s meant to represent. At the end of the experience, you have a beautiful nude woman who comes to the surface. Then, you find there are other nude folks who have been rebirthed. We shot in a tank at Pinewood to have the underwater shots and the shots of the woman, who is the final realization of this rebirthing. For the elements of the roll landing in the water, we did shoot one for real, but ultimately that was CG. Then the environment above the surface was fully CG. But then you go to the virtual reality game where Jin Cheng is walking with the Emperor and the Follower, and a chaotic era suddenly comes upon us, and there is no room to hide behind a rock from the immense forces of the sun getting ready to melt everybody. The Follower lies down on the ground in a vast desert with the pyramid off in the distance and has to dehydrate. That one presented a bit more of a challenge because you didn’t have the opportunity to travel around her and have these beautiful caustics. We heavily researched the footage of things dehydrating, like fruit left in the sun rotting, to try to get a look that was like how the body would deflate when it was completely sapped of water.”

Being able to digitally reconstruct sets and locations was made even more important by having a highly reflective VR headset. “The reflective headset required some photogrammetry type work while you were shooting because it was often in smaller places, and there’s some crew, all of the lighting equipment, and everything is dressed in one direction,” Gombos remarks. “You had to capture that three-dimensionally because as production turned around, you needed it for the paint-out from the other direction. We had HDRI panorama photography of that, but then we also had good spatial information about the room and how that would connect to the shot lighting we would do. We wanted to be precise, and on top of that, we often did a special reconstruction shoot after we were done. I would come in for a few hours and do the photography and LiDAR required for locations. These assets were created on the fly, so we had them to review our work but also to send off to the vendors, and they were using them in post. The 3D assets were helpful in quality-controlling the work and a good tool for orienting our teams. I could have this little 3D representation of the set and share and discuss that with the DP or director. I would say, ‘If they are here, it’s going to look like this.’ It wasn’t theoretical but quite precise.”

“One thing that was a bit different for me was that I did a lot of the concept work,” Gombos observes. “I enjoyed doing that for set extensions that then Stefen and the visual effects vendor working with him would execute.” Fangmeier is intrigued by what the viewer reaction will be beyond hardcore sci-fi fans of the books. “It’s not your typical sci-fi where you spend a lot of time in outer space or meet aliens, and it’s not an alien invasion per se. It’s the first season, so it’s fairly mellow and highbrow. It deals with concepts other than the stuff that people are usually used to when they watch sci-fi. I’m curious what the mainstream viewer will think about that.”

There is a core mandate no matter the project for Kullback. “If we are able to help tell the story visually in areas where you can’t photograph something, then that’s our dimension. We’re never creating eye candy for the sake of eye candy. We work hard to have everything that we do fit into the greater whole and to do it in a seamless and attractive way. And, most importantly, in a way that communicates and moves the story forward and realizes the vision of the filmmakers.”

By Trevor Hogg

Images courtesy of Pixar Animation Studios.

Searit Huluf, Writer and Director of “Self.”

With the release of “Self,” a cautionary tale about the desire to please and be accepted by others, Searit Huluf got an opportunity to showcase her filmmaking talents as part of the Pixar SparkShort program. The project was partly inspired by her parents trying to adjust to life in America after immigrating from Ethiopia, which, at the time, was ravaged by civil war.

“My mom and dad separated, so it was just my mom looking after me. I had a lot more independence because she was working a lot. I mainly stayed in the east side of Los Angeles, which became my playground. It wasn’t until I got to UCLA that I started to explore more of Los Angeles, in particular the west side, which felt like being in a different country because everything is so clean, and there were a lot more shops.”

An opportunity presented itself to visit Ethiopia right before the coronavirus pandemic paralyzed international travel. “It was our first mother/daughter trip, and I had forgotten what it was like to be under my mom again,” Huluf recalls. “While in Ethiopia, my mother was cautious because the capital of Addis Ababa is not where my people are from, which is the Tigray region. It wasn’t until we got to Mekelew where my mom’s side of the family lives that we got to relax and meet people.” Huluf watched her aunts make coffee called ‘buna’ from scratch. “After roasting the coffee, they take it to everyone to smell to say thanks before grinding. Then you have to hand-grind the roasted coffee with a mortar and pestle. My friends and I made it every day. It was so much fun.”

Participating in sports was not an affordable option growing up, so Huluf consumed a heavy dose of anime consisting of Sailor Moon, Naruto, One Piece and Bleach. What was made available to her in high school was the ability to take community college classes on computer coding and engineering through STEM [Science, Technology, Engineering and Mathematics] programming. “I did a website competition inside of which there was a film competition, so I did a live-action short with all of the seniors in my group, and afterward I was like, ‘I want to go to art school.’” The art school in question was the UCLA School of Theater, Film and Television where she studied screenwriting and stop-motion animation. “I was trying to figure out what is the closest I could get to animation but not have to draw, and it was stop-motion; that was the happy medium because I do love live-action and animation. My schooling was live-action, but a lot of my internships were animation; that’s how I divided it up.”

Internships included Cartoon Network and DreamWorks Animation, then Pixar came to UCLA. “I kept in contact with the recruiter and started at Pixar as an intern in production management while making films on the side,” Huluf remarks. “I am also big in the employee resource groups within Pixar. I spearheaded the first celebration of Black History Month at Pixar and decided to make a documentary where Black Pixar employees talk about what it is like to be Black in America. The 19th Amendment documentary came about because I cared about people voting for the 2020 elections. It was a way to promote Pixar fans to go out and vote by having Pixar women talk about why they should do it and the complicated history of the 19th Amendment. Documentaries are scary because you go in with what’s there and make the story in the editing room. That was a lot of fun, and I gained more confidence to be a filmmaker, and I switched back to making narrative films.”

Soul was the first high-profile project at Pixar for Searit Huluf.

“I got to work with Tippett Studio, which I love! … There’s that Pixar comfort where everybody knows each other or someone adjacent. But these were complete strangers, and there was a big age gap between us. A little bit of me was going, ‘Are they not going to respect me?’ And it was the exact opposite. They were so loving and caring.”

—Searit Huluf, Writer and Director of “Self”

Critiquing, not writing, is where Huluf excels. “I went to a talk where a writer said that you have to wear different hats when you’re writing. When you’re wearing the writing hat, you’re writing all of your thoughts and ideas. Once you’re done writing, you put on the critique hat, and that’s where you start editing what you wrote. Is this actually good? Is it going to help your story? Is your structure right? You can’t wear both hats at the same time. I think a lot about that when I write. What is also great is that I went to UCLA and did screenwriting. I’m still in touch with all my screenwriting friends, and everyone is still writing. It’s nice to write something and the next week we do a writing session together and talk about the things that we’re writing.” Two individuals stand out for their guidance, she says. “I still keep in touch with my UCLA professor, Kris Young, and am part of the Women in Animation mentorship program; [director] Mark Osborne is my mentor. It’s nice talking with him. He did Kung Fu Panda and The Little Prince. Mark is doing everything I want to do with my life! He’s doing live-action and animation. In this mentorship program, other women are working on their own projects. One Saturday we have it with him and the other Saturday is just us. That has been great.”

“Self” was inspired by Searit Huluf desiring to gain social acceptance as well as by the struggles her parents faced immigrating to America from Ethiopia.

“Self” marks the first time since WALL-E that live-action elements have been integrated with computer animation by Pixar.

Soul afforded Huluf the opportunity to work with one of her role models, writer/director Kemp Powers, who co-directed Soul.

Spearheading the first celebration of Black History Month at Pixar, Huluf went on to serve as a cultural consultant on Soul.

Searit Huluf helped to facilitate brainstorming sessions to make sure that there was cultural authenticity to the story, character designs and animation for Soul.

“[Director] Mark [Osborne] is doing everything I want to do with my life! He’s doing live-action and animation. In this mentorship program, other women are working on their own projects. One Saturday we have it with him and the other Saturday is just us. That has been great.”

—Searit Huluf, Writer and Director of “Self”

Huluf has a support network at Pixar. “Luckily for me, I’m not the first Black shorts director at Pixar. Aphton Corbin made ‘Twenty Something,’ so it’s nice to be able to talk to her about it. Michael Yates did the Win or Lose streaming [series for Disney+], and I keep regular contact with Kemp Powers. It’s nice to talk to people who are in your arena. Personally, too, that’s why I do both live-action and animation, because there’s something about both mediums that gives me motivation and hope.”

Like Mark Osborne with The Little Prince, Huluf was able to combine computer animation and stop-motion to make “Self,” where the protagonist is a wooden puppet surrounded by environments and metallic characters created digitally. “I got to work with Tippett Studio, which I love! I studied stop-motion at UCLA, so I know what the process looks like, but I have never done it in a professional setting, and I’m not the animator; other people are doing this who have worked on James and the Giant Peach and The Nightmare Before Christmas. There’s that Pixar comfort where everybody knows each other or someone adjacent. But these were complete strangers, and there was a big age gap between us. A little bit of me was going, ‘Are they not going to respect me?’ And it was the exact opposite. They were so loving and caring. I still text with them.”

“I spearheaded the first celebration of Black History Month at Pixar and decided to make a documentary where Black Pixar employees talk about what it is like to be Black in America. The 19th Amendment documentary came about because I cared about people voting for the 2020 elections. It was a way to promote Pixar fans to go out and vote by having Pixar women talk about why they should do it and the complicated history of the 19th Amendment.”

—Searit Huluf, Writer and Director of “Self”

Going through various character designs for the character of Self.

A significant lesson was learned when making “Self.” “I did a lot of my independent films by myself, and this time I had people who are paid and wanted to be involved,” Huluf notes. “Working with the animators was one of the most insightful moments for me. I would film myself and say, ‘How about we do this?’ They would be like, ‘We could do that, but how about this?’ And it was so much better. In the beginning, I was very precious about it and slowly realized, ‘They know what this film is and what needs to be told, too.’ It was a learning curve for me.” The transition to feature directing is more likely to first occur in live-action rather than animation. “That’s primarily because the stakes are higher in animation than a live-action film. This is purely based on budgets.”

A comparison of Self with one of the female Goldies.

A personal joy for Huluf was being able to design the costume for Self.

“When I think about filmmakers I look up to, I see that they start with smaller indie features. Barry Jenkins is a perfect example. Moonlight was only a couple of million dollars, and then he made a higher-ground film If Beale Street Could Talk. I want to start small and slowly build myself up. The big jump for me now is to do a feature. Luckily for me, I’m not too intimidated to do it. It’s more about when someone will give me the chance. I do believe in my ideas and storytelling capabilities. Right now, I’m writing and seeing how things go. I look forward to people watching ‘Self’ and being able to talk to them about it because that’s something new for me.”

Tippett Studio Senior Art Director and Lead Puppeteer Mark Dubeau explains the puppet design to Searit Huluf.

The hair of Self was the hardest aspect to get right. It was inspired by the hairstyle of Searit Huluf.

A dream come true for Huluf was being able to collaborate with Tippett Studio on “Self.”

Showcasing the detailed eyeballs for the stop-motion puppet crafted by Tippett Studio.

Pixar SparkShorts Build “Self” Esteem for Emerging Filmmakers

Treading a path blazed by WALL-E where live-action footage was incorporated into the storytelling, the Pixar SparkShort “Self,” conceived by Searit Huluf, revolves around a wooden stop-motion puppet desperate to be accepted into a society of metallic beings.

“For me, it was, ‘I really want to do stop-motion. I want to visually see something alive onscreen that you can see the handprint of a human touching it,’” Huluf states. “I wanted the story to be the reason it had to be stop-motion.”

A central theme is the personal cost of gaining social acceptance. “I will play this game in my head of hiding parts of myself so I can conform and be part of the group,” Huluf explains. “That’s how I visualized Self as she literally rips herself apart to be like everyone else. The other aspect is my mom immigrated to America from Ethiopia, and I wanted to talk about how immigrants are usually not seen or heard. I wanted Self to feel like she is Ethiopian, so she has natural wood that has been carved by a masterful craftsman. There is something nice about her being so natural herself but wanting to be something so shiny, plastic and fake. There is something visually beautiful about that. Another layer on top is that she is even animated differently. Self is stop-motion, so she’s animated on 2s and 3s versus the CG Goldies, which are on 1s and are so slick when they move. Self is poppy and jumpy at points when she tries to talk and interact with them.”

Excitement and fear were felt when working out the logistics for the project. “I was excited about doing something so different and unique, but at the same time I had no idea of how you properly schedule out and manage a stop-motion film,” remarks Eric Rosales, Producer of “Self.” “I was like, ‘Alright, let’s learn this on the fly.’ You’re taking this whole new element and trying to fit pieces into our puzzle and take their puzzle pieces and put them all together.” The other puzzle pieces belonged to Tippett Studio, which constructed, animated and shot the stop-motion puppet. Rosales says, “It was a breath of fresh air in the sense that you get to see how other studios approach their scheduling, decision-making and problem-solving. It was exciting for us to learn from them as much as they were learning from us, and learn how to take the different aspects of the stop-motion process and incorporate it into our pipeline. And vice versa, how we would handle something and transfer that information back over to Tippett. We did a lot of back and forth with them and shared a lot of thoughts.”

Complementing and informing the design of the physical puppet was the digital version. “We had a digital puppet that Pixar was able to move around in the computer and act out what they wanted the puppet to do. That informed us in terms of how we needed to build the puppet to be able to effectively move in those ways,” states Mark Dubeau, Senior Art Director and Lead Puppeteer at Tippett Studio. “There is a lot you can do digitally that you can’t do with a puppet, and so we knew probably that we would have to build about three or four puppets to be able to do that number of shots.” Nine different faces were constructed to express panic, sadness, happiness and anger.

For a long time, the digital double of Self was a placeholder for 19 shots that utilized stop-motion animation. “But as things progressed, we turned off our character as she is now being added in the comp,” states Nathan Fariss, Visual Effects Supervisor of “Self.” “The amount of color tweaking and general polish that was happening in comp, and even the color grading steps in post, were much more than any of our other projects because we needed to match a photographic element to our CG world and vice versa.”

“Self” Producer Eric Rosales and Huluf examine the various pieces that go into making a stop-motion puppet.

Various body parts and variations had to be created by Tippett Studio to give the stop-motion puppet the correct range of physicality and emotion.

Previs and layout dictated the shot design for the stop-motion scenes. “We had a first lighting pass that was already done and even before Tippett started lighting everything up,” Rosales remarks. “We sent members of our lighting team over there to do the last bits of tweaking. Searit acted out every single shot that Tippett was going to do. She did it in her living room by herself. To sell the foot contact, Tippett ended up building a concrete slab out of Styrofoam so we were able to see Self physically walking on top of something.”

Self makes a wish upon a falling star that enables her to exchange wooden body parts with metallic ones. “I usually talk about what the character is feeling at the moment,” Huluf states. “The way we talked about that scene of her jumping off of the roof, I wanted to show how she goes from, ‘Oh, cool, these body pieces are falling from the sky,’ to slowly becoming more obsessive in finding them. That face is the last piece for her. ‘I’m going to finally belong.’ A lot of people do a lot of crazy things to belong. In Self’s case she’ll rip herself apart to be like everyone. Self jumping off of the roof is the climax of the film because it’s her craziness and obsessiveness all wrapped into one as she falls into darkness. We had a lot of conversations about how she snaps out of it, and for me, your face is who you are. As she steps on her own face, it snaps her back into reality and makes her realize and go, ‘Oh, my God! Why did I do this?’”

The cityscape did not have to be heavily detailed. “We ended up settling on a look that was a flat color or a gradient so it felt like there was a little bit of life in the city and things were lit up,” Fariss reveals. “There were other people present in the buildings, but it didn’t necessarily draw the audience into the lives that are going on in the buildings around there. The cities were mostly hand-built. There wasn’t enough scope to warrant going a procedural route to put the cities together, so they were hand-dressed, and there was a lot of shot-by-shot scooting some buildings around to get a more pleasing composition.”

More problematic was getting the hair right for the puppet. States Dubeau, “Once we figured out what urethane to use then we did all of the hair. However, we found out it was too heavy for the head. We had to go back and make two pieces of hair that go down and frame either side of her face. Those were made out of that material and painted. We hollow-cast the ones on the back, which had a wire that went into the head, and then you could move those pieces around, but you couldn’t bend them. The ones in front could swing and twist. It totally worked. Now you got the sense of this light, fluffy hair that was bouncing around on her head.”

“Self” was an educational experience. “One of the things that we learned from Lisa Cooke [Stop-Motion Producer] at Tippett is you end up saving your time in your shot production,” Rosales notes. “It’s all of the pre-production and building where you’re going to spend the bulk of your money. There was a lesson in patience for us because with CG we can take everything up to the last minute and say, ‘I want to make this or that change.’ But here we needed to zero in and know what we’ve got going on. Once the animators get their hands on the puppet and start doing the shots, the first couple of shots take a little bit of time. After that handful of shots, they get a feel for the character, movement and puppet, and it starts moving quickly. Then we were able to get our team on, and they were able to start learning their cadence as well. It started becoming a nice little machine that we were putting together.”

Huluf appreciated the collaborative spirit that made the stop-motion short possible. “I’m approving things at Tippett and going back to Pixar to approve all of the CG shots multiple times a week. We had a lot of people who were big fans of ‘Self’ and helped us while they were on other shows or even on vacation or working on the weekend because they were so passionate. I’m grateful that Jim Morris [President of Pixar] let me have this opportunity to make a stop-motion film, which has never been done before at Pixar.”

—Trevor Hogg

By TREVOR HOGG

Images courtesy of FX.

Actor Hiroyuki Sanada had a key role in making sure that period-accurate Japanese was spoken by the characters.

Inspired by the power struggle in feudal Japan that led to the rise of Tokugawa Ieyasu to Shōgun and his relationship with English sailor William Adams, who became a key advisor, James Clavell authored the seminal historical fiction novel Shōgun, adapted into a classic NBC miniseries starring Richard Chamberlain and Toshiro Mifune. Forty-four years later, the story has been revisited by FX and Hulu as a limited 10-episode production under the creative guidance of Justin Marks and Rachel Kondo.